Unreliability and automated UI tests often go hand in hand. This post covers the considerations to keep in mind while developing automated tests so that they do not become flaky.

Many SaaS products are built as monoliths and rely heavily on regression testing before releasing any change. When regression testing is involved, teams are often forced to maintain a bulky suite of automated tests.

With monolithic services, teams initially aim to build a highly effective suite of automated UI tests, expecting it to reduce manual testing effort and allow them to release faster.

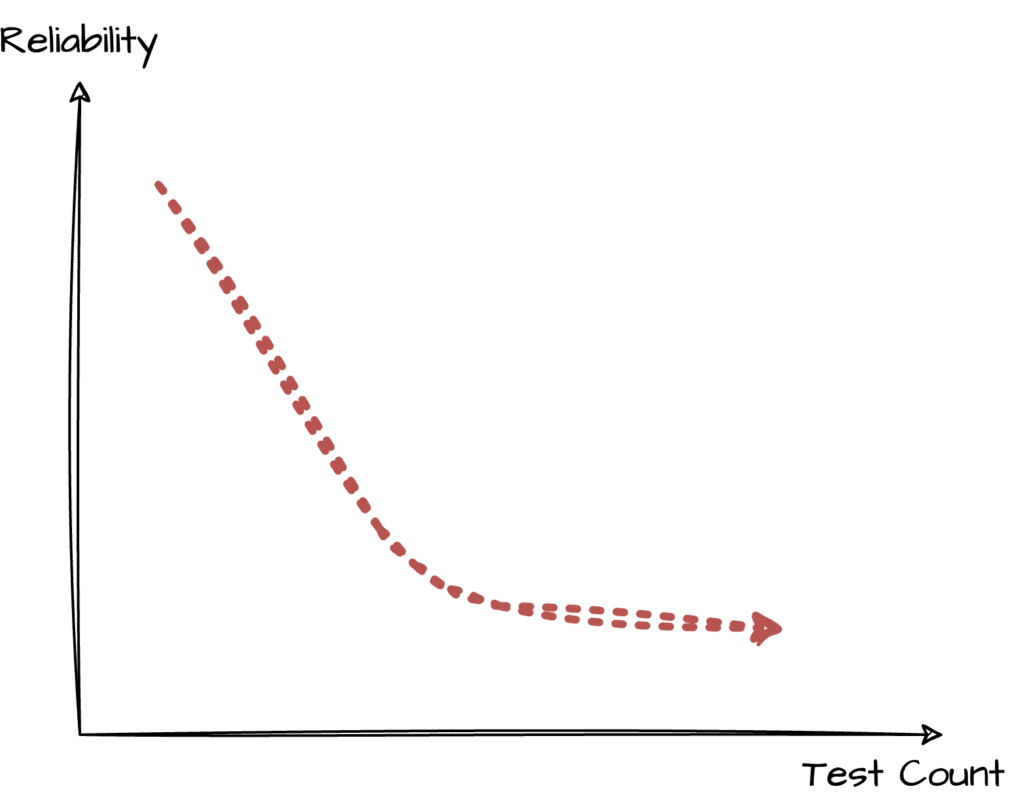

Over time, however, the opposite often happens.

As the automated UI test suite grows, it starts behaving inconsistently: tests fail randomly. Eventually, the team spends significant effort on manual testing as well as on debugging the randomly failing tests to understand the problem.


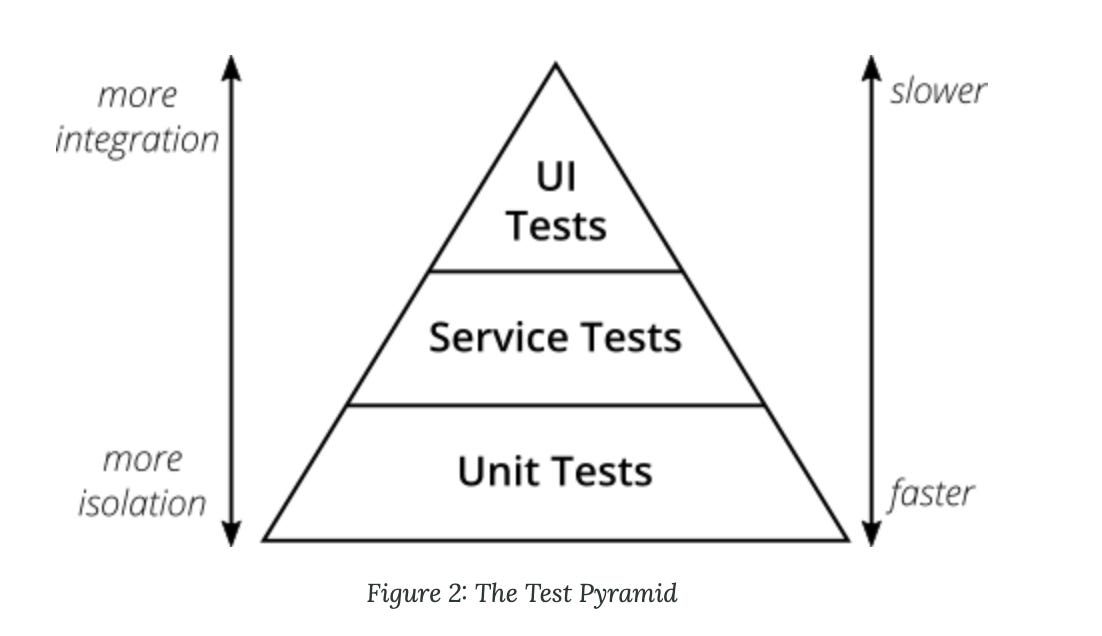

To ensure consistency in automated UI tests, one widely accepted strategy in the microservices world is to follow the test pyramid. In this approach, most scenarios are covered by unit, integration, and component tests, with only a few tests at the UI level.

However, achieving a proper pyramid requires teams to design their application code to support a variety of tests at lower levels, such as unit, integration, and component tests. Teams that start planning for the pyramid too late often fall back on UI-based automation and include every possible test scenario in their UI test suite.

This post discusses the considerations to keep in mind when you decide to go with the anti-pyramid approach and add most of your tests at the UI level.

Robust Test Data Setup Process

UI tests are generally tightly coupled to test data. Any small problem in the test data setup can cause every test that depends on it to fail, so it is essential to have a robust process for setting up test data. Here are a few guidelines to keep this step free from errors:

- Database Snapshots and Dumps: Explore options like database snapshots and dumps to set up the test data instead of utilising Selenium scripts for this purpose.

- REST APIs: Use REST APIs to set up the prerequisites for a test, as they provide a reliable and efficient way to handle test data.

- Test Independence: Ensure that each test can create the test data it requires. Tests should not depend on test data created or modified by another test. This ensures test independence and reduces the risk of cascading failures.

- Test Data Cleanup: Generally, QAs are inclined to include a test data cleanup step within the test so that other tests can reuse the data. However, I suggest that test data cleanup should not be part of the test itself. Instead, it should be managed through database-level operations or APIs. To achieve test independence, it is crucial to ensure that tests are not dependent on the cleanup steps of other tests.
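As a rough illustration of the last two guidelines, here is a minimal Python sketch. It assumes an in-memory SQLite database as a stand-in for the test environment's data store; `create_customer`, `cleanup_run`, `RUN_ID`, and the `customers` table are hypothetical names. Each test creates its own uniquely named record tagged with a run ID, and cleanup happens once, at the database level, outside any individual test.

```python
import sqlite3
import uuid
from typing import Tuple

# One tag per test run (hypothetical scheme). Every record a test creates
# carries it, so cleanup can happen once at the database level.
RUN_ID = uuid.uuid4().hex[:8]


def make_test_db() -> sqlite3.Connection:
    """In-memory stand-in for the test environment's database (assumption)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers ("
        "id TEXT PRIMARY KEY, name TEXT NOT NULL, run_id TEXT NOT NULL)"
    )
    return conn


def create_customer(conn: sqlite3.Connection, prefix: str = "customer") -> Tuple[str, str]:
    """Create a customer owned by the calling test only.

    The unique name means no other test, parallel or sequential, can depend
    on, modify, or collide with this record.
    """
    customer_id = uuid.uuid4().hex
    name = f"{prefix}-{RUN_ID}-{customer_id[:6]}"
    conn.execute(
        "INSERT INTO customers (id, name, run_id) VALUES (?, ?, ?)",
        (customer_id, name, RUN_ID),
    )
    conn.commit()
    return customer_id, name


def cleanup_run(conn: sqlite3.Connection, run_id: str = RUN_ID) -> int:
    """Harness-level cleanup: runs after the suite, never inside a test."""
    deleted = conn.execute(
        "DELETE FROM customers WHERE run_id = ?", (run_id,)
    ).rowcount
    conn.commit()
    return deleted
```

In a real suite, `create_customer` would more likely call one of your application's REST APIs, and `cleanup_run` would be a scheduled job or a suite-level teardown hook rather than a step inside any test.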

Ensuring Consistency in Parallel Test Execution

Initially, you might not plan to run the tests in parallel, but as the test count grows, parallel execution becomes necessary to get results faster.

In many of the projects I have worked on, a common problem was that tests running sequentially in a single thread produced consistent results, but the same tests often failed when run in parallel on different threads.

Parallel execution caused overlapping operations, such as two tests modifying the same data at the same time, resulting in unexpected behaviour.

This factor is easy to overlook while the suite is small, but it becomes hard to ignore once you have more than 10-15 tests.

There is no fixed guideline to overcome this issue. Depending on the situation, you can try different approaches to address the problem of overlapping tests. Some strategies to consider:

- Tagging the tests that must run in a single thread, so they are executed sequentially and never overlap with the parallel run.

- Breaking the whole suite into smaller sub-suites. Tests within a sub-suite can run in parallel, while the entire suite runs the sub-suites sequentially.

- Using 2-3 tenants, so that tests running in parallel do not share the same data.
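The sketch below combines these strategies in plain Python, purely to show the scheduling idea. It assumes a test is simply a callable that takes a tenant ID and raises `AssertionError` on failure; the tenant IDs, `serial_only`, and the other helper names are hypothetical. Sub-suites run one after another; inside a sub-suite, tests are split into one lane per tenant and the lanes run concurrently, so two tests never share a tenant at the same time. Tests tagged as serial-only run last, on their own.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Sequence

# A "test" is modelled as a callable taking a tenant ID (simplification).
Test = Callable[[str], None]


def serial_only(test: Test) -> Test:
    """Tag a test that must never overlap with any other test."""
    test.serial_only = True  # type: ignore[attr-defined]
    return test


def run_lane(tenant: str, tests: Sequence[Test]) -> Dict[str, str]:
    """Run tests one after another against a single tenant."""
    results: Dict[str, str] = {}
    for test in tests:
        try:
            test(tenant)
            results[test.__name__] = "passed"
        except AssertionError as exc:
            results[test.__name__] = f"failed: {exc}"
    return results


def run_subsuite_in_parallel(tests: Sequence[Test], tenants: Sequence[str]) -> Dict[str, str]:
    """Split a sub-suite into one lane per tenant and run the lanes concurrently."""
    lanes = [list(tests[i::len(tenants)]) for i in range(len(tenants))]
    merged: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=len(tenants)) as pool:
        for lane_result in pool.map(run_lane, tenants, lanes):
            merged.update(lane_result)
    return merged


def run_suite(subsuites: Sequence[Sequence[Test]], tenants: Sequence[str]) -> Dict[str, str]:
    """Run sub-suites sequentially; serial-only tests run last on one tenant."""
    results: Dict[str, str] = {}
    serial: List[Test] = []
    for subsuite in subsuites:
        parallel = [t for t in subsuite if not getattr(t, "serial_only", False)]
        serial.extend(t for t in subsuite if getattr(t, "serial_only", False))
        results.update(run_subsuite_in_parallel(parallel, tenants))
    results.update(run_lane(tenants[0], serial))
    return results
```

In practice, your test runner's own parallelism settings would handle the threading; the part worth carrying over is the isolation rule of one running test per tenant at any moment.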

Stable Infrastructure

A stable infrastructure is another vital factor in preventing intermittent test failures. One common problem I’ve experienced is teams treating the test environment as a playground while tests are running. To mitigate this, make sure the team knows when a test run is in progress, so nobody toggles features that could affect the current execution.

Implementing an automated notification in the team’s channel when tests start running can help address this issue effectively.
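A minimal sketch of such a notification, assuming your chat tool provides an incoming-webhook URL that accepts a JSON body with a `text` field (Slack's incoming webhooks work this way; other tools may expect a different payload). The function names are hypothetical, and only the Python standard library is used.

```python
import json
import urllib.request


def build_run_started_message(webhook_url: str, suite: str, environment: str) -> urllib.request.Request:
    """Build the POST request announcing that a UI test run has started."""
    payload = {
        "text": (
            f"UI test run '{suite}' has started on {environment}. "
            "Please avoid toggling features or changing configuration until it finishes."
        )
    }
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def notify_run_started(webhook_url: str, suite: str, environment: str, timeout: float = 5.0) -> bool:
    """Send the notification; a chat outage must never block the test run."""
    request = build_run_started_message(webhook_url, suite, environment)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return 200 <= response.status < 300
    except OSError:  # URLError, HTTPError and socket timeouts all derive from OSError
        return False
```

You might call `notify_run_started(webhook_url, "checkout-regression", "staging")` from a suite-level setup hook, reading the webhook URL from a secret or environment variable (the suite and environment names here are hypothetical). Returning `False` instead of raising keeps a notification failure from stopping the run.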

Consistent Wait Conditions

UI tests depend heavily on waits, and effective waits are crucial for consistent results. Tools like Playwright and Cypress offer good alternatives to explicit waits by letting you wait for specific API calls to finish. Tools that lack this capability rely on waiting for elements to become visible, which may not stay reliable over time.
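In Playwright, waiting for an API call is typically done with `page.expect_response(...)` (Python) or `page.waitForResponse(...)` (JavaScript), and in Cypress with `cy.intercept(...).as('alias')` followed by `cy.wait('@alias')`. For tools without such a feature, polling a meaningful condition against a deadline is still more reliable than a fixed sleep. Below is a tool-agnostic sketch of that idea; `wait_until` is a hypothetical helper, not part of any framework.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_until(
    condition: Callable[[], T],
    timeout: float = 10.0,
    interval: float = 0.25,
    description: str = "condition",
) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` expires.

    Exceptions raised by the condition (for example, an element that is not
    attached yet) are treated as "not ready yet" and retried.
    """
    deadline = time.monotonic() + timeout
    last_error: Optional[Exception] = None
    while True:
        try:
            result = condition()
            if result:
                return result
        except Exception as exc:  # keep polling, but remember why it failed
            last_error = exc
        if time.monotonic() >= deadline:
            raise TimeoutError(
                f"Timed out after {timeout}s waiting for {description}"
            ) from last_error
        time.sleep(interval)
```

For example, `wait_until(lambda: api.get_order_status(order_id) == "CONFIRMED", timeout=30, description="order confirmation")`, where `api.get_order_status` stands in for whatever backend check your application exposes (hypothetical).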

Appropriate Locators

Excessive use of absolute XPaths can make tests fragile, as any change in the DOM structure can cause the tests to fail. Instead, prefer using relative XPaths and attributes that rarely change.
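The following Python sketch shows why absolute paths are fragile. It uses the standard library's `xml.etree.ElementTree`, which supports only a limited XPath subset and evaluates paths relative to an element, so the absolute path is written from the root element as `./div/...`. The markup and the `data-testid` attribute are hypothetical; the attribute stands in for any stable hook your developers agree to keep. When a redesign wraps the content in an extra `<section>`, the absolute path stops matching while the relative, attribute-based path still finds the element.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Hypothetical markup before and after a redesign adds a wrapping <section>.
BEFORE = """
<body>
  <div><span><div>
    <span class="price order-total" data-testid="order-total">42.00</span>
  </div></span></div>
</body>
"""
AFTER = """
<body>
  <section>
    <div><span><div>
      <span class="price order-total" data-testid="order-total">42.00</span>
    </div></span></div>
  </section>
</body>
"""

# Absolute path: encodes every ancestor, so any structural change breaks it.
ABSOLUTE = "./div/span/div/span[@class='price order-total']"
# Relative path anchored on a stable attribute: ignores the surrounding structure.
RELATIVE = ".//span[@data-testid='order-total']"


def find_text(markup: str, path: str) -> Optional[str]:
    """Return the text of the first element matching `path`, or None."""
    element = ET.fromstring(markup.strip()).find(path)
    return None if element is None else element.text


for label, markup in (("before", BEFORE), ("after", AFTER)):
    print(label, "absolute:", find_text(markup, ABSOLUTE), "relative:", find_text(markup, RELATIVE))
```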

A poor example of a locator:

/div/span/div/span[@class="price order-total"]

Hybrid Approach to Automate Use Cases

API + UI = User Journey

A hybrid approach to covering business cases is another recommended way of testing at the UI level.

For example, once the login flow has been tested through the UI in one test, all other tests should log in via an API call instead. This removes the possibility of those tests failing during the login process.

Similarly, if one test already covers adding an item to the cart through the UI, other tests that involve this step should add the item to the cart via the API.

This method increases consistency by relying only on the UI components you actually want to test.
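A minimal sketch of such API shortcuts in Python, using only the standard library. The endpoints (`/api/login`, `/api/cart/items`), payload fields, and bearer-token scheme are assumptions for illustration; substitute your application's real API. The HTTP transport is injectable so the helper can be exercised without a live server.

```python
import json
import urllib.request
from typing import Any, Callable, Dict, Optional, Tuple

# A transport sends a prepared request and returns (status, body).
Transport = Callable[[urllib.request.Request], Tuple[int, bytes]]


def http_transport(request: urllib.request.Request) -> Tuple[int, bytes]:
    """Default transport: a real HTTP call via urllib."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status, response.read()


class ApiShortcuts:
    """Hypothetical API shortcuts for steps already covered by a dedicated UI test."""

    def __init__(self, base_url: str, transport: Transport = http_transport) -> None:
        self.base_url = base_url.rstrip("/")
        self.transport = transport

    def _post(self, path: str, payload: Dict[str, Any], token: Optional[str] = None) -> Dict[str, Any]:
        headers = {"Content-Type": "application/json"}
        if token:
            headers["Authorization"] = f"Bearer {token}"  # assumed auth scheme
        request = urllib.request.Request(
            self.base_url + path,
            data=json.dumps(payload).encode("utf-8"),
            headers=headers,
            method="POST",
        )
        status, body = self.transport(request)
        if status >= 400:
            raise RuntimeError(f"POST {path} failed with HTTP {status}")
        return json.loads(body) if body else {}

    def login(self, username: str, password: str) -> str:
        """Log in via the API instead of the UI form; returns a session token."""
        return self._post("/api/login", {"username": username, "password": password})["token"]

    def add_to_cart(self, token: str, sku: str, quantity: int = 1) -> Dict[str, Any]:
        """Put an item in the cart via the API so the UI test can start at checkout."""
        return self._post("/api/cart/items", {"sku": sku, "quantity": quantity}, token)
```

A UI test would then call `login` and `add_to_cart` in its setup, hand the session to the browser (in Playwright, for example, as a cookie through `BrowserContext.add_cookies`, if your application keeps the session in a cookie), and drive the UI only for the step it actually verifies, such as checkout.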

